About Me

I received my Ph.D. from the School of Computer Science and Engineering at Beihang University, China, under the supervision of Prof. Wenge Rong and Prof. Chuantao Yin.

My research primarily focuses on building Trustworthy AI systems. My current research themes include:

- Investigating and Improving the Faithfulness of LLMs.

- Exploring and Enhancing the Chain-of-Thought (CoT) Monitorability of Large Reasoning Models (LRMs).

- Understanding the Internal Workings of LLMs through Mechanistic Interpretability.

Education

- Beihang University, Ph.D. in Computer Science, Sep. 2020 - Nov. 2024

Employment

- BIGAI NLCo Lab, Assistant Researcher, Dec. 2024 - present

News

2025

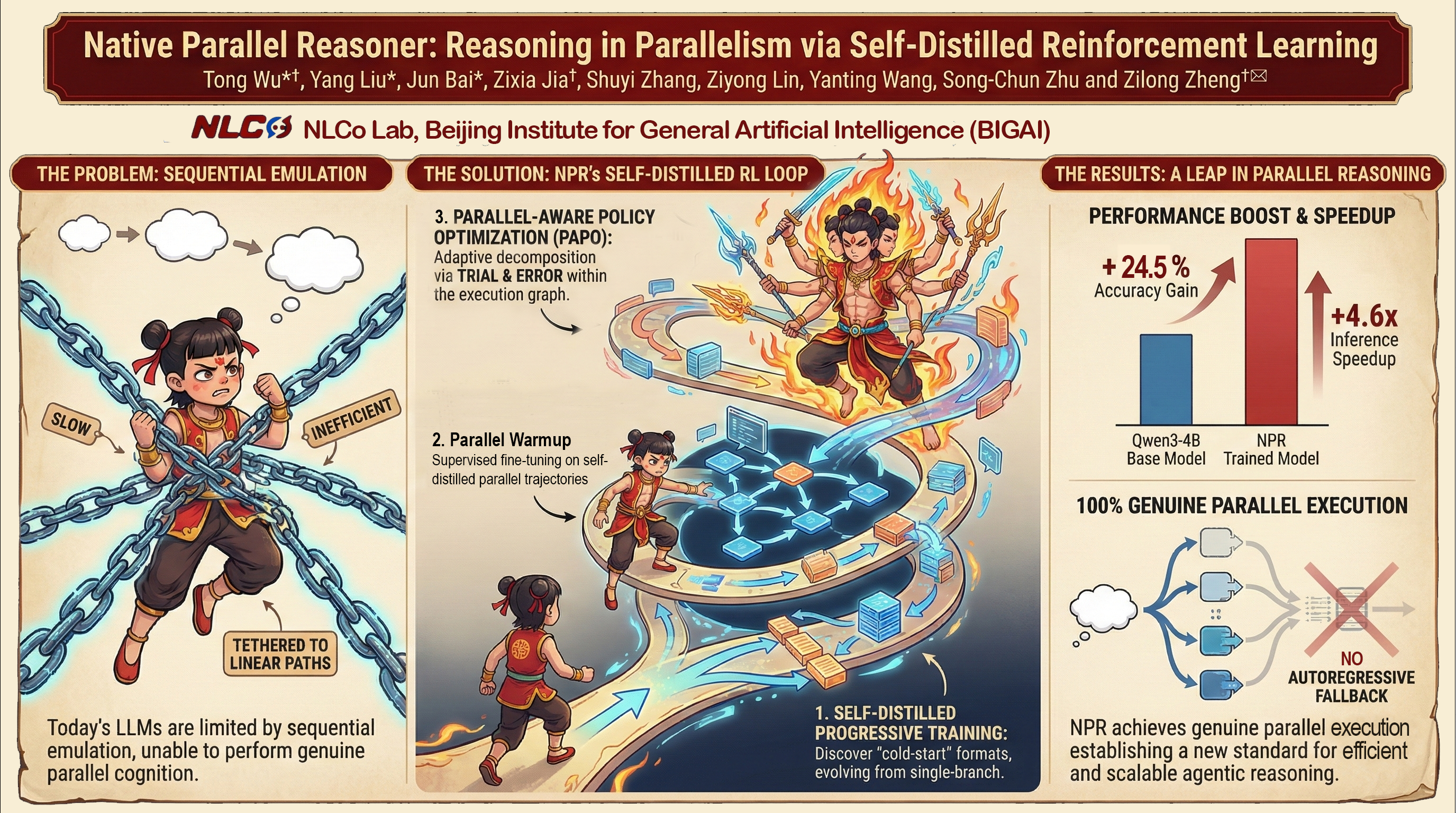

🚀 We are excited to announce the release of the Native Parallel Reasoner.

Dec 09

Aug 20

CLG has been accepted by ACL 2025.

May 15

Selected Publications

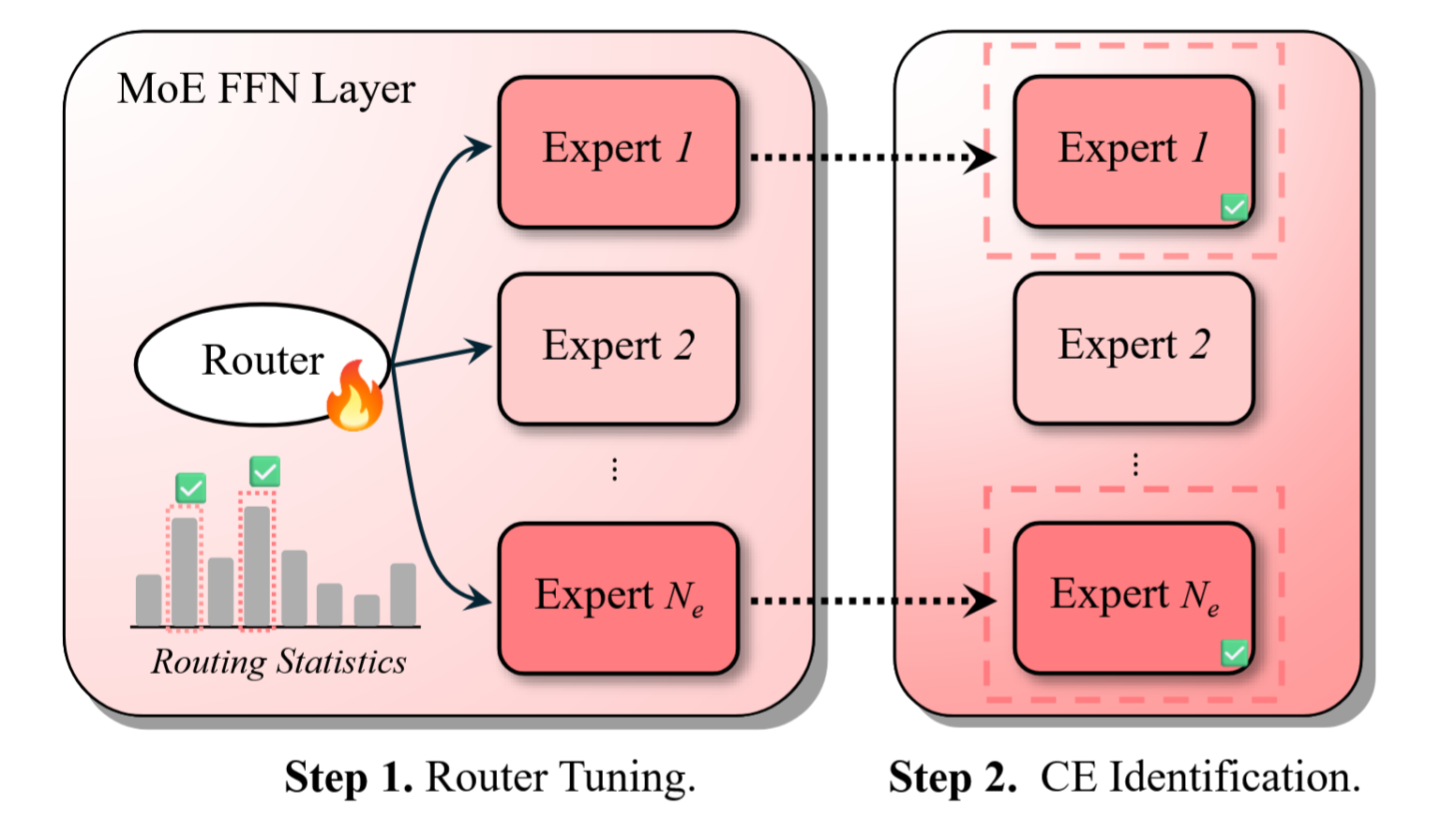

Understanding and Leveraging the Expert Specialization of Context Faithfulness in Mixture-of-Experts LLMs

Jun Bai, Minghao Tong, Yang Liu, Zixia Jia, Zilong Zheng

EMNLP 2025 Top 2%
